The Nova LXD project provides a Nova driver for managing full system containers using LXD as part of an OpenStack cloud.

Manual installation - Ubuntu server (Ubuntu 16.04 and newer)

Nova LXD is available in Ubuntu 16.04 and newer. The Nova LXD driver is installed on Nova Compute servers only:

The 'nova-lxd' package ensures that the nova-compute daemon is started with the correct hypervisor driver for LXD; however, the 'nova' user must have group membership of the 'lxd' group to have access to manage LXD containers:
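A minimal sketch of the group change, assuming the service account is called nova as described above. The helper names in_group and ensure_nova_in_lxd are illustrative, and restarting nova-compute afterwards is an assumption made so that the running daemon picks up the new membership:

```shell
# Succeeds if user $1 is a member of group $2 (helper name is illustrative).
in_group() {
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx "$2"
}

# Add the nova user to the lxd group only when the membership is missing,
# then restart nova-compute (assumed service name) so it sees the change.
ensure_nova_in_lxd() {
    if ! in_group nova lxd; then
        sudo usermod -a -G lxd nova
        sudo service nova-compute restart
    fi
}
```

Calling ensure_nova_in_lxd on each Compute host can be repeated safely, since it only acts when the membership is missing.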

In order to support migration of containers between Compute hosts, LXD must be configured to listen for network connections and a trust password must be set:

Each Nova LXD instance within your deployment must then be configured with remotes for all of the other Nova LXD instances:
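As a sketch of what "remotes for all other instances" means in practice, the helper below prints the lxc remote add commands one host would need. The host names are hypothetical placeholders, the helper name is illustrative, and port 8443 is assumed to be the port LXD is listening on. It only prints the commands; running them will prompt for the trust password.

```shell
# Print the `lxc remote add` commands that host $1 needs so that it knows
# every other host in the list. Nothing is executed.
# Usage: remote_add_commands SELF HOST...
remote_add_commands() {
    self=$1
    shift
    for host in "$@"; do
        if [ "$host" != "$self" ]; then
            echo "lxc remote add $host https://$host:8443"
        fi
    done
}

# Hypothetical three-node deployment, seen from compute1.
remote_add_commands compute1 compute1 compute2 compute3
```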

Automated deployment using Juju

Deploying OpenStack is a complex process, for which a number of deployment tools exist. Juju provides a convenient way to deploy OpenStack on Ubuntu, and a specific bundle of charms can be used to deploy an OpenStack cloud using LXD.

The bundle automatically configures storage for container root filesystems using LVM and sets up appropriate network configuration, trust passwords and remotes to support migration of containers between LXD hypervisors.

LXD images for OpenStack

LXD requires the use of 'raw' images, which are generally installed onto a block device such as a disk partition or an LVM volume. Canonical publishes raw images of Ubuntu for various architectures (arm64, armhf, i386, amd64, ppc64el). These can be imported similarly to the following example, which imports an amd64 Ubuntu trusty image:

Creating containers

LXD containers are managed in the same manner as KVM instances, either via Horizon or via the Nova CLI:

You may need to associate a floating IP address and configure appropriate security rules, depending on the network and security configuration of the OpenStack cloud you are using.

Network changes in Ubuntu 17.10+

This guide has been updated for netplan, introduced in 17.10. Please test the configuration and let me know if you have any issues with it (easiest via tweet, @jasonbayton).

LXD works perfectly fine with a directory-based storage backend, but both speed and reliability are greatly improved when ZFS is used instead. 16.04 LTS saw the first officially supported release of ZFS for Ubuntu and having just set up a fresh LXD host on Elastichosts utilising both ZFS and bridged networking, I figured it’d be a good time to document it.

In this article I’ll walk through the installation of LXD, ZFS and Bridge-Utils on Ubuntu 16.04 and configure LXD to use either a physical ZFS partition or loopback device combined with a bridged networking setup allowing for containers to pick up IP addresses via DHCP on the (v)LAN rather than a private subnet.

Before we begin

This walkthrough assumes you already have an Ubuntu 16.04 server host set up and ready to work with. If you do not, please download and install it now.

You’ll also need a spare disk, partition or adequate space on-disk to support a loopback file for your ZFS filesystem.

Finally this guide is reliant on the command line and some familiarity with the CLI would be advantageous, though the objective is to make this a copy & paste article as much as possible.

Part 1: Installation

To get started, let’s install our packages. They can all be installed with one command as follows:

sudo apt-get install lxd zfsutils-linux bridge-utils

However for this I will output the commands and the result for each package individually:

sudo apt-get install lxd

sudo apt-get install zfsutils-linux

sudo apt-get install bridge-utils

You’ll notice I’ve installed LXD, ZFS and bridge utils. LXD should be installed by default on any 16.04 host as is shown by the output above, however should there be any updates this will bring them down before we begin.

ZFS and bridge utils are not installed by default; ZFS needs to be installed to run our storage backend and bridge utils is required in order for our bridged interface to work.

Part 2: Configuration

With the relevant packages installed, we can now move on to configuration. We’ll start with the bridge, because until it is in place containers within LXD won’t be able to obtain DHCP addresses.

Setting up the bridge

Legacy ifupdown

We’ll begin by opening /etc/network/interfaces in a text editor. I like vim:

sudo vim /etc/network/interfaces

This is the default interfaces file. What we’ll do here is add a new bridge named br0. The simplest edit to make to this file is as follows (note the emphasis):

This will set the eth0 interface to manual and create a new bridge that piggybacks directly off it.
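A minimal sketch of such an edit, assuming the physical interface is called eth0 and that the bridge should take its own address via DHCP. The helper name is illustrative. It only prints the stanzas so they can be reviewed before being merged into /etc/network/interfaces by hand:

```shell
# Print example ifupdown stanzas: eth0 set to manual, br0 bridged onto it.
bridge_dhcp_stanza() {
    cat <<'EOF'
auto lo
iface lo inet loopback

# Physical NIC: carries no address itself, it only feeds the bridge
auto eth0
iface eth0 inet manual

# Bridge: obtains its address from the DHCP server on the LAN
auto br0
iface br0 inet dhcp
    bridge_ports eth0
EOF
}

bridge_dhcp_stanza
```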

If you wish to create a static interface while you’re editing this file, the following may help you:
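A sketch of a static variant of the br0 stanza, again assuming eth0 as the bridged port. The helper name is illustrative and the addresses in the example call are placeholders to be replaced with values from your own network:

```shell
# Print a static-address br0 stanza for /etc/network/interfaces.
# Usage: bridge_static_stanza ADDRESS NETMASK GATEWAY [DNS]
# When DNS is omitted, the gateway address is used as the name server.
bridge_static_stanza() {
    cat <<EOF
auto br0
iface br0 inet static
    address $1
    netmask $2
    gateway $3
    dns-nameservers ${4:-$3}
    bridge_ports eth0
EOF
}

# Placeholder addresses only.
bridge_static_stanza 192.168.0.10 255.255.255.0 192.168.0.1
```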

Following any edits, it’s a good idea to restart the interfaces to force the changes to take place. Obviously if you’re connected via SSH this will disconnect your session. You’ll need to have physical access to the machine/VM.

sudo ifdown eth0 && sudo ifup eth0 && sudo ifup br0

Modern netplan

We’ll begin by opening /etc/netplan/01-netcfg.yaml in a text editor. I like vim:

sudo vim /etc/netplan/01-netcfg.yaml
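As a rough sketch of a static-IP bridge in netplan syntax, assuming an interface named eth0, the networkd renderer and placeholder 192.168.0.x addresses. The helper name is illustrative. It prints the YAML for review rather than writing to /etc/netplan:

```shell
# Print an example netplan file defining bridge br0 on top of eth0.
# Interface name and all addresses are placeholders.
netplan_bridge_yaml() {
    cat <<'EOF'
network:
  version: 2
  renderer: networkd
  ethernets:
    eth0:
      dhcp4: no
  bridges:
    br0:
      interfaces: [eth0]
      dhcp4: no
      addresses: [192.168.0.10/24]
      gateway4: 192.168.0.1
      nameservers:
        addresses: [192.168.0.1]
EOF
}

netplan_bridge_yaml
```

For a DHCP bridge, the addresses, gateway4 and nameservers keys under br0 would be dropped and dhcp4 set to yes instead.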

All of the above bolded lines have been added/modified for a static IP bridge. Edit to suit your environment and then run the following to apply changes:

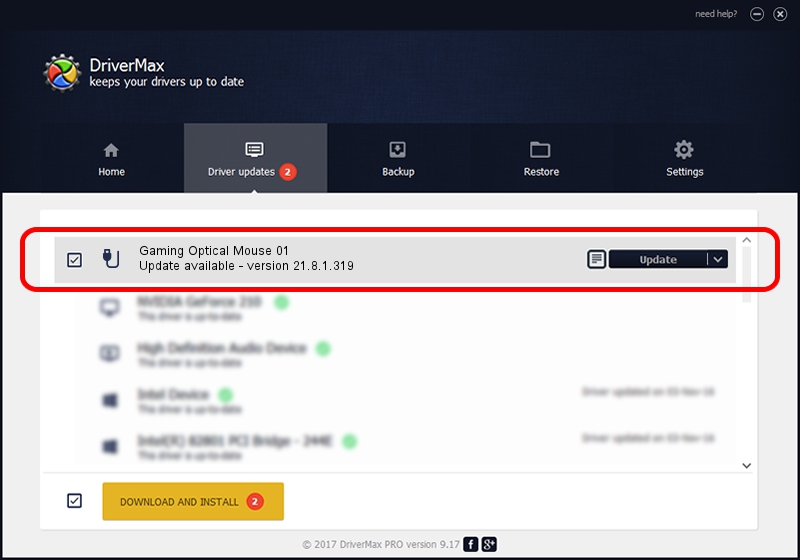

Download Lxd Drivers

sudo netplan apply

Running ifconfig on the CLI will now confirm the changes have been applied:

Configuring LXD & ZFS

With the bridge up and running we can now begin to configure LXD. Before we start setting up containers, LXD requests we run sudo lxd init to configure the package. As part of this, we’ll be selecting our newly created bridge for network connectivity and configuring ZFS as LXD will take care of both during setup.

For this guide I’ll be using a dedicated hard drive for the ZFS storage backend, though the same procedure can be used for a dedicated partition if you don’t have a spare drive handy. For those wishing to use a loopback file for testing, the procedure is slightly different and will be addressed below.

Find the disk/partition to be used

First we’ll run sudo fdisk -l to list the available disks & partitions on the server. Here’s a relevant snippet of the output I get:

Make a note of the partition or drive to be used. In this example we’ll use partition sdb1 on disk /dev/sdb.

Be aware

If your disk/partition is currently formatted and mounted on the system, it will need to be unmounted with sudo umount /path/to/mountpoint before continuing, or LXD will error during configuration.

Additionally if there’s an fstab entry this will need to be removed before continuing, otherwise you’ll see mount errors when you next reboot.
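Both checks can be sketched as a small helper, assuming the /dev/sdb1 partition from the example above. The function names are illustrative. Note that fstab entries written as UUID=... or LABEL=... will not be caught by the simple device-name match used here:

```shell
# Succeeds if device $1 is listed as a mount source in the mounts table $2
# (defaults to /proc/mounts).
is_mounted() {
    awk -v dev="$1" '$1 == dev { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Unmount the device if needed, then list any fstab lines naming it so they
# can be removed by hand before the next reboot.
release_partition() {
    dev=$1
    if is_mounted "$dev"; then
        sudo umount "$dev"
    fi
    grep -n "^$dev[[:space:]]" /etc/fstab || true
}
```

With these defined, release_partition /dev/sdb1 would be run before starting lxd init.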

Configure LXD

Changes to bridge configuration

As of LXD 2.5 there have been a few changes. If you are installing a version of LXD older than 2.5, please continue below. For 2.5 and above, in order to use the pre-configured bridge, select No for “Do you want to configure the LXD bridge (yes/no)?”, then see Configure LXD bridge (2.5+) below for details of adding the bridge manually afterwards.

Check the version of LXD by running sudo lxc info.

Start the configuration of LXD by running sudo lxd init

Let’s break the above options down:

Name of the storage backend to use (dir or zfs): zfs

Here we’re defining ZFS as our storage backend of choice. The other option, DIR, is a flat-file storage option that places all containers on the host filesystem under /var/lib/lxd/containers/ (though the ZFS partition is transparently mounted under the same path and so accessed equally as easily). It doesn’t benefit from features such as compression and copy-on-write however, so the performance of the containers using the DIR backend simply won’t be as good.

Create a new ZFS pool (yes/no)? yes
Name of the new ZFS pool: lxd

Here we’re creating a brand new ZFS pool for LXD and giving it the name of “lxd”. We could also choose to use an existing pool if one were to exist, though as we left ZFS unconfigured it does not apply here.

Would you like to use an existing block device (yes/no)? yes
Path to the existing block device: /dev/sdb1

Here we’re opting to use a physical partition rather than a loopback device, then providing the physical location of said partition.

Would you like LXD to be available over the network (yes/no)? no

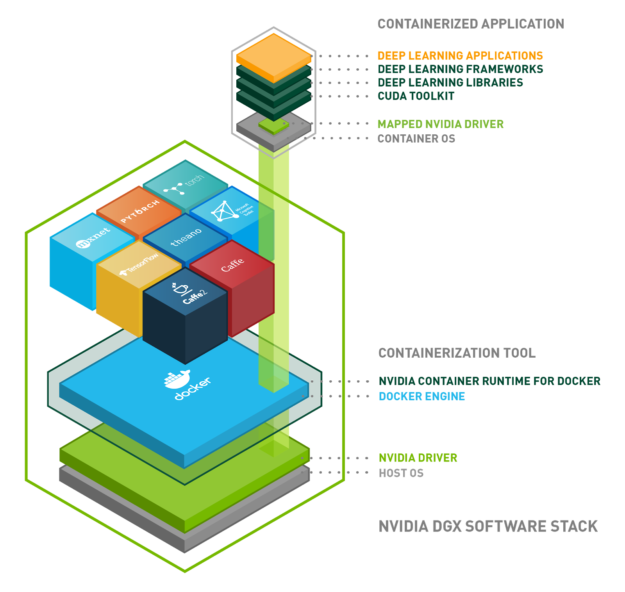

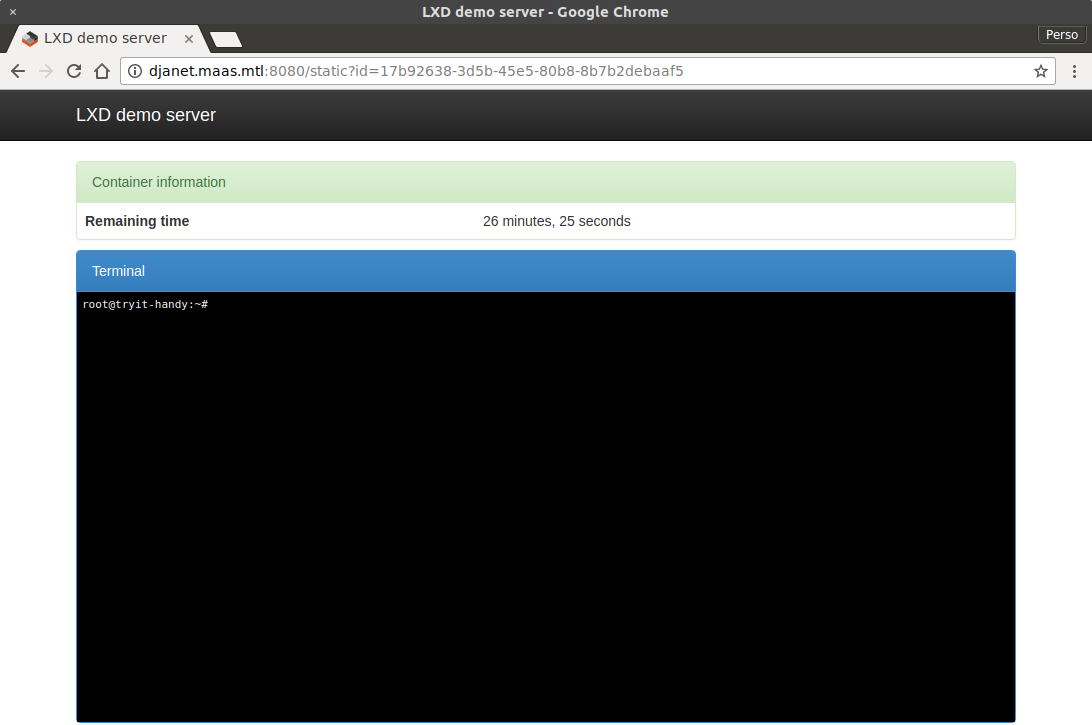

It’s possible to connect to LXD from other LXD servers or via the API from a browser (see https://linuxcontainers.org/lxd/try-it/ for an example of this).

As this is a simple installation we won’t be utilising this functionality and it is as such left unconfigured. Should we wish to enable it at a later date, we can run:

lxc config set core.https_address [::]
lxc config set core.trust_password some-secret-string

Where some-secret-string is a secure password that’ll be required by other LXD servers wishing to connect in order to administer the LXD host or retrieve non-public published images.

Do you want to configure the LXD bridge (yes/no)? yes

Here we tell LXD to use our pre-configured bridge. This opens a new workflow as follows:

We don’t want LXD to create a new bridge for us, so we’ll select no here.

LXD now knows we may have our own bridge already set up, so we’ll select yes in order to declare it.

Finally we’ll input the bridge name and select OK. LXD will now use this bridge.

And with that, LXD will finish configuration and ready itself for use.

Configure LXD bridge (2.5+)

In version 2.5, the bridge workflow above has been retired in favour of the new lxc network command.

With lxd init complete above, add the br0 interface to the default profile with:

lxc network attach-profile br0 default eth0

If by accident the lxdbr0 interface was configured, it must be first detached from the default profile with:

lxc network detach-profile lxdbr0 default eth0

It’ll be obvious if this needs to be done, as running lxc network attach-profile br0 default eth0 will result in the error “error: device already exists”.

With that complete, LXD will now successfully use the pre-configured bridge.
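The detach-then-attach sequence can be sketched as a repeatable helper. This assumes that lxc profile show default lists the bridge as a parent: entry under the eth0 device, and the function names are illustrative:

```shell
# Succeeds when the profile YAML on stdin has a device whose parent is $1.
profile_uses_bridge() {
    grep -Eq "^[[:space:]]*parent:[[:space:]]*$1[[:space:]]*\$"
}

# Detach lxdbr0 from the default profile if present, then attach br0
# unless it is already there.
switch_default_profile_to_br0() {
    if lxc profile show default | profile_uses_bridge lxdbr0; then
        lxc network detach-profile lxdbr0 default eth0
    fi
    if ! lxc profile show default | profile_uses_bridge br0; then
        lxc network attach-profile br0 default eth0
    fi
}
```

Checking the profile first avoids the “device already exists” error rather than reacting to it.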

Configuring LXD with a ZFS loopback device

Run sudo lxd init as above, but use the following options instead.

The size in GB of the ZFS partition is important, as we don’t want to run out of space any time soon. Although ZFS partitions may be resized, it’s better to be a little generous now and not have to worry about reconfiguring it later.
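The same storage choices can also be passed on the command line instead of being answered interactively. This is a sketch, assuming an LXD 2.x release whose lxd init accepts the --auto, --storage-backend, --storage-pool, --storage-create-device and --storage-create-loop options; confirm with lxd init --help before relying on it. The helper name is illustrative and the 20 GB size is a placeholder. The helper only prints the command:

```shell
# Print (but do not run) a non-interactive lxd init command line.
# Usage: lxd_init_cmd device /dev/sdb1   -> ZFS pool on a block device
#        lxd_init_cmd loop 20            -> ZFS pool on a 20 GB loop file
lxd_init_cmd() {
    base="sudo lxd init --auto --storage-backend zfs --storage-pool lxd"
    case "$1" in
        device) echo "$base --storage-create-device $2" ;;
        loop)   echo "$base --storage-create-loop $2" ;;
        *)      echo "usage: lxd_init_cmd device|loop VALUE" >&2; return 1 ;;
    esac
}

lxd_init_cmd loop 20
```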

Increasing file and inode limits

Since it’s entirely possible we may wish to run multiple LXD containers in the future, it’s a good idea to increase the open file and inode limits now. This will prevent the dreaded “too many open files” errors which commonly occur with container solutions.

For the inode limits, open the sysctl.conf file as follows:

sudo vim /etc/sysctl.conf

Now add the following lines, as recommended by the LXD project:
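A sketch of what those lines could look like, assuming they are the inotify limits given in the LXD production-setup documentation. The values should be checked against the current LXD documentation, and the helper name is illustrative:

```shell
# Print the sysctl lines to append to /etc/sysctl.conf.
# Values are assumed from the LXD production-setup guidance.
lxd_sysctl_lines() {
    cat <<'EOF'
fs.inotify.max_queued_events = 1048576
fs.inotify.max_user_instances = 1048576
fs.inotify.max_user_watches = 1048576
EOF
}

lxd_sysctl_lines
```

Once reviewed, the output can be appended with lxd_sysctl_lines | sudo tee -a /etc/sysctl.conf rather than typed into the editor.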

It should look as follows:

After saving the file we’ll need to reboot, but not yet as we’ll also configure the open file limits.

Open the limits.conf file as follows:

sudo vim /etc/security/limits.conf

Now add the following lines. 100K should be enough:
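A sketch of the corresponding limits.conf entries, taking “100K” as 100000 open files and applying it to all users through the * wildcard. The helper name is illustrative. The wildcard does not cover the root user, which would need its own entries if required:

```shell
# Print soft and hard open-file limits for /etc/security/limits.conf.
lxd_nofile_limits() {
    cat <<'EOF'
* soft nofile 100000
* hard nofile 100000
EOF
}

lxd_nofile_limits
```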

It should look as follows:

Once the server is rebooted (this is important!) the new limits will apply and we’ll have future-proofed the server for now.

sudo reboot

Part 3: Test

With our bridge set up, our ZFS storage backend created and LXD fully configured, it’s time to test everything is working as it should be.

We’ll first get a quick overview of our ZFS storage pool using sudo zpool list lxd

With ZFS looking fine, we’ll run a simple lxc info to generate our client certificate and verify the configuration we’ve chosen for LXD:

It would appear the storage backend is correctly using our ZFS pool: “lxd”. If we now take a look at the default profile using:

lxc profile show default

We should see LXD using br0 as the default container eth0 interface:

Success! The only thing left to do now is launch a container.

We can use the official Ubuntu image repo and spin up a Xenial container with the alias xen1 using the command:

lxc launch ubuntu:xenial xen1

Which should return an output like this:

Now, we can use lxc list to get an overview of all containers including their IP addresses:

We can see the xen1 container has picked up an IP from our DHCP server on the LAN, which is exactly what we want.
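Because a freshly launched container can take a few seconds to obtain its DHCP lease, the check can be scripted. This is a sketch that polls lxc list with the IPv4 column (-c 4) and extracts the first dotted-quad address from whatever it prints. The function names and the 30-attempt default are illustrative:

```shell
# Extract the first IPv4-looking address from the text on stdin.
first_ipv4() {
    grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' | head -n 1
}

# Poll until container $1 reports an IPv4 address, up to $2 attempts
# (default 30, one second apart). Prints the address on success.
wait_for_ip() {
    name=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        ip=$(lxc list "$name" -c 4 | first_ipv4) || true
        if [ -n "$ip" ]; then
            echo "$ip"
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}
```

Running wait_for_ip xen1 straight after the launch prints the address once DHCP has answered, or fails after the timeout if the bridge is not passing traffic. Note that lxc list also matches other containers whose names begin with the given string.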

Finally, we can use lxc exec xen1 bash to gain CLI access to the container we’ve just launched:

Conclusion

While a little long-winded, setting up LXD with a ZFS storage backend and utilising a bridged interface for connecting containers directly to the LAN isn’t overly difficult, and it’s only gotten easier as LXD has matured to version 2.0.

Are you brand new to LXD? I thoroughly recommend you take a look at LXD developer Stéphane Graber’s incredible LXD blog series to get up to speed.
